System: CentOS 7.6; Loki version: 2.7.1; MinIO version: 2021-11-24T23:19:33Z

Environment:

| Server | Role | Notes |
|---|---|---|
| 172.20.20.231 | loki-read-1 | Loki read node 1 |
| 172.20.20.232 | loki-read-2 | Loki read node 2 |
| 172.20.20.233 | loki-write-1 | Loki write node 1 |
| 172.20.20.234 | loki-write-2 | Loki write node 2 |
| 172.20.20.235 | promtail-1, nginx | Promtail log collector 1, nginx server 1 |
| 172.20.20.236 | promtail-2, nginx | Promtail log collector 2, nginx server 2 |
| 172.20.20.164 | minio-1 | Storage 1 |
| 172.20.20.165 | minio-2 | Storage 2 |
| 172.20.20.161 | nginx | Reverse proxy |

Loki downloads: https://github.com/grafana/loki/tags

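The Loki version really matters, so pay close attention here. Loki and Promtail must be the same version, and from 2.4 onward the configuration files differ somewhat between releases. I've already fallen into these pits so you don't have to.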

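If your nginx servers produce less than 100 GB of logs per day, you don't need this setup: a single Loki node can handle about 100 GB/day. My environment produces roughly 300 GB per day and the volume keeps growing, so multiple nodes are a must.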
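As a back-of-the-envelope check of the sizing argument above, the sketch below converts a daily volume into an average ingest rate and compares it with the single-node figure. It assumes "GB" means GiB and treats ~100 GB/day as a fixed single-node capacity; both numbers are the author's estimate, not a Loki benchmark, and real nginx traffic peaks well above the daily average.

```python
SECONDS_PER_DAY = 86_400
MIB_PER_GIB = 1024


def average_mib_per_second(gib_per_day):
    """Average ingest rate if the whole day's volume arrived evenly over 24 hours."""
    return gib_per_day * MIB_PER_GIB / SECONDS_PER_DAY


def load_vs_single_node(gib_per_day, single_node_gib_per_day=100.0):
    """Daily volume as a multiple of the assumed single-node capacity."""
    return gib_per_day / single_node_gib_per_day


if __name__ == "__main__":
    daily = 300.0  # approximate nginx log volume in this environment
    print(f"average rate: {average_mib_per_second(daily):.2f} MiB/s")  # 3.56
    print(f"x single-node capacity: {load_vs_single_node(daily):.1f}")  # 3.0
```

An average of only a few MiB/s can look small, but the multiple of the single-node figure is what drives the move to separate read and write nodes.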

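I read many documents about distributed Loki deployment, including the official docs, and none of them explains a multi-node deployment clearly. Few people use this setup yet, and the official docs skim over the key settings. I haven't fully mastered it myself either, so for now I'm recording the configuration I have deployed and am running in production.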

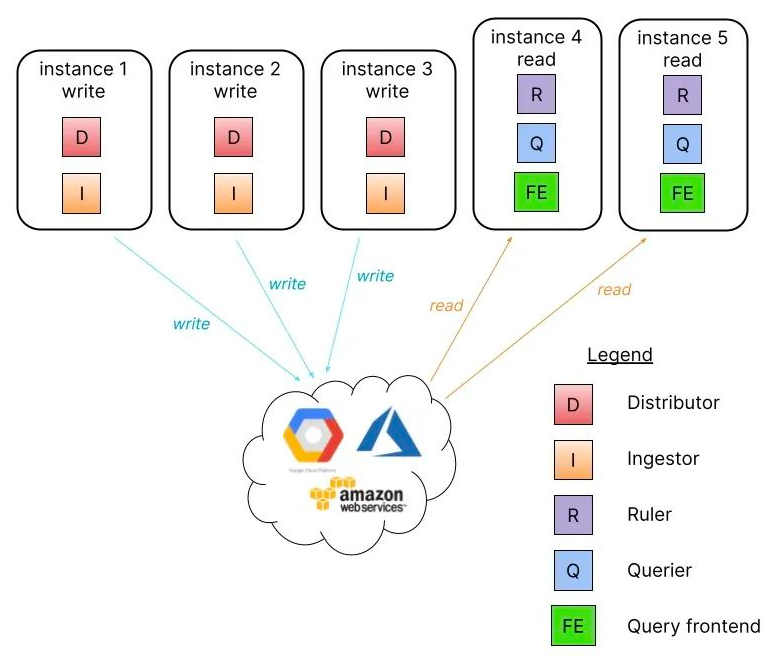

A diagram borrowed from the web: the Loki read/write separation architecture. Don't be intimidated by all the components.

I've covered single-node deployment in an earlier post (search this site for it), so let's go straight to the configuration.

1. Loki configuration

Read node configuration:

```yaml
auth_enabled: false
# read node
target: read
http_prefix:

server:
  http_listen_address: 0.0.0.0
  grpc_listen_address: 0.0.0.0
  http_listen_port: 3100
  grpc_listen_port: 9095
  log_level: info

common:
  storage:
    s3:
      endpoint: 172.20.20.165:9000
      insecure: true
      bucketnames: loki
      access_key_id: Admin
      secret_access_key: 1234#abcd
      s3forcepathstyle: true
  compactor_address: http://172.20.20.161:3100

memberlist:
  join_members: ["172.20.20.231", "172.20.20.232", "172.20.20.233", "172.20.20.234"]
  dead_node_reclaim_time: 30s
  gossip_to_dead_nodes_time: 15s
  left_ingesters_timeout: 30s
  bind_addr: ['0.0.0.0']
  bind_port: 7946
  gossip_interval: 2s

ingester:
  lifecycler:
    join_after: 10s
    observe_period: 5s
    ring:
      replication_factor: 1
      kvstore:
        store: memberlist
    final_sleep: 0s
  chunk_idle_period: 1m
  wal:
    enabled: true
    dir: /data/loki/wal
  max_chunk_age: 1m
  chunk_retain_period: 30s
  chunk_encoding: snappy
  chunk_target_size: 1.572864e+06
  chunk_block_size: 262144
  flush_op_timeout: 10s

schema_config:
  configs:
    - from: 2020-08-01
      store: boltdb-shipper
      object_store: s3
      schema: v11
      index:
        prefix: index_
        period: 24h

storage_config:
  boltdb_shipper:
    shared_store: s3
    active_index_directory: /data/loki/index
    cache_location: /data/loki/tmp/boltdb-cache

limits_config:
  max_cache_freshness_per_query: '10m'
  enforce_metric_name: false
  reject_old_samples: true
  reject_old_samples_max_age: 30m
  ingestion_rate_mb: 10
  ingestion_burst_size_mb: 20
  # parallelize queries in 15min intervals
  split_queries_by_interval: 15m

chunk_store_config:
  max_look_back_period: 336h

table_manager:
  retention_deletes_enabled: true
  retention_period: 336h

query_range:
  # make queries more cache-able by aligning them with their step intervals
  align_queries_with_step: true
  max_retries: 5
  parallelise_shardable_queries: true
  cache_results: true

frontend:
  log_queries_longer_than: 5s
  compress_responses: true
  max_outstanding_per_tenant: 2048

query_scheduler:
  max_outstanding_requests_per_tenant: 1024

querier:
  query_ingesters_within: 2h

compactor:
  working_directory: /data/loki/tmp/compactor
  shared_store: s3
```
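Several values in this file are easier to read once converted. The sketch below does that arithmetic: 336h is 14 days, a 24h query split at 15m intervals becomes 96 sub-queries, and `chunk_target_size: 1.572864e+06` is exactly 1.5 MiB, or six `chunk_block_size` blocks of 256 KiB. It also assumes Loki's default `ingestion_rate_strategy` is `global`, under which each healthy distributor (here, each write node) enforces `ingestion_rate_mb` divided by the number of distributors; check that against the 2.7 docs before relying on it. `parse_duration` is a hypothetical helper covering only s/m/h, not Loki's own duration parser.

```python
import re

UNIT_SECONDS = {"s": 1, "m": 60, "h": 3600}
MIB = 1024 * 1024


def parse_duration(text):
    """Parse simple Go-style durations such as '336h', '15m' or '1h30m' into seconds.

    Only the s/m/h units are handled; anything else (e.g. '10ms', '1.5h') raises ValueError.
    """
    parts = re.findall(r"(\d+)([smh])", text)
    if not parts or "".join(n + u for n, u in parts) != text:
        raise ValueError(f"unsupported duration: {text!r}")
    return sum(int(n) * UNIT_SECONDS[u] for n, u in parts)


def per_distributor_limit_mib(global_limit_mib, healthy_distributors):
    """Local share of ingestion_rate_mb under the (assumed default) 'global' strategy."""
    if healthy_distributors < 1:
        raise ValueError("need at least one healthy distributor")
    return global_limit_mib / healthy_distributors


# Values copied from the configuration above.
retention_days = parse_duration("336h") / 86_400  # retention_period -> 14.0
subqueries_per_day = parse_duration("24h") // parse_duration("15m")  # split_queries_by_interval -> 96
chunk_target_mib = 1.572864e6 / MIB  # chunk_target_size -> 1.5
blocks_per_chunk = 1.572864e6 / 262_144  # chunk_target_size / chunk_block_size -> 6.0
write_node_limit_mib = per_distributor_limit_mib(10, 2)  # ingestion_rate_mb over 2 write nodes -> 5.0

if __name__ == "__main__":
    print(retention_days, subqueries_per_day, chunk_target_mib, blocks_per_chunk, write_node_limit_mib)
```

If one write node goes down, the same model gives the survivor the whole 10 MiB/s share, which is the point of dividing by healthy distributors rather than configured ones.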

Write node configuration:

```yaml
auth_enabled: false
target: write
http_prefix:

server:
  http_listen_address: 0.0.0.0
  grpc_listen_address: 0.0.0.0
  http_listen_port: 3100
  grpc_listen_port: 9095
  log_level: info

common:
  storage:
    s3:
      endpoint: 172.20.20.165:9000
      insecure: true
      bucketnames: loki
      access_key_id: Admin
      secret_access_key: 1234#abcd
      s3forcepathstyle: true
  compactor_address: http://172.20.20.161:3100

memberlist:
  join_members: ["172.20.20.231", "172.20.20.232", "172.20.20.233", "172.20.20.234"]
  dead_node_reclaim_time: 30s
  gossip_to_dead_nodes_time: 15s
  left_ingesters_timeout: 30s
  bind_addr: ['0.0.0.0']
  bind_port: 7946
  gossip_interval: 2s

ingester:
  lifecycler:
    join_after: 10s
    observe_period: 5s
    ring:
      replication_factor: 1
      kvstore:
        store: memberlist
    final_sleep: 0s
  chunk_idle_period: 1m
  wal:
    enabled: true
    dir: /data/loki/wal
  max_chunk_age: 1m
  chunk_retain_period: 30s
  chunk_encoding: snappy
  chunk_target_size: 1.572864e+06
  chunk_block_size: 262144
  flush_op_timeout: 10s

schema_config:
  configs:
    - from: 2020-08-01
      store: boltdb-shipper
      object_store: s3
      schema: v11
      index:
        prefix: index_
        period: 24h

storage_config:
  boltdb_shipper:
    shared_store: s3
    active_index_directory: /data/loki/index
    cache_location: /data/loki/tmp/boltdb-cache

limits_config:
  max_cache_freshness_per_query: '10m'
  enforce_metric_name: false
  reject_old_samples: true
  reject_old_samples_max_age: 30m
  ingestion_rate_mb: 10
  ingestion_burst_size_mb: 20
  # parallelize queries in 15min intervals
  split_queries_by_interval: 15m

chunk_store_config:
  max_look_back_period: 336h

table_manager:
  retention_deletes_enabled: true
  retention_period: 336h

query_range:
  # make queries more cache-able by aligning them with their step intervals
  align_queries_with_step: true
  max_retries: 5
  parallelise_shardable_queries: true
  cache_results: true

frontend:
  log_queries_longer_than: 5s
  compress_responses: true
  max_outstanding_per_tenant: 2048

query_scheduler:
  max_outstanding_requests_per_tenant: 1024

querier:
  query_ingesters_within: 2h

compactor:
  working_directory: /data/loki/tmp/compactor
  shared_store: s3
```

The only difference between the read and write configuration files is `target: read` versus `target: write`.
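Because the two files differ in a single line, one option is to keep one template and render both variants from it, so the shared settings cannot drift apart. The sketch below uses Python's `string.Template` with a hypothetical, heavily abbreviated `BASE_CONFIG` (in practice it would hold the full configuration with `target: $target`) and `difflib` to confirm that only the target line changes.

```python
import difflib
from string import Template

# Hypothetical, abbreviated template; the real one would contain the full config.
# string.Template substitutes $-placeholders, so a literal "$" must be written "$$".
BASE_CONFIG = Template("""\
auth_enabled: false
target: $target
server:
  http_listen_port: 3100
  grpc_listen_port: 9095
""")


def render(target):
    """Render the config for one simple scalable target ('read' or 'write')."""
    if target not in ("read", "write"):
        raise ValueError(f"unexpected target: {target!r}")
    return BASE_CONFIG.substitute(target=target)


def changed_lines(a, b):
    """Return only the -/+ lines of a unified diff between two config texts."""
    diff = difflib.unified_diff(a.splitlines(), b.splitlines(), lineterm="")
    return [
        line
        for line in diff
        if line.startswith(("-", "+")) and not line.startswith(("---", "+++"))
    ]


if __name__ == "__main__":
    print(changed_lines(render("read"), render("write")))
```

Writing `render("read")` and `render("write")` to the files each node loads would then keep both roles in sync from one source.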

2. Nginx proxy configuration

```nginx
# upstream and server blocks belong inside the http {} context
upstream read {
    server 172.20.20.231:3100;
    server 172.20.20.232:3100;
}

upstream write {
    server 172.20.20.233:3100;
    server 172.20.20.234:3100;
}

upstream cluster {
    server 172.20.20.231:3100;
    server 172.20.20.232:3100;
    server 172.20.20.233:3100;
    server 172.20.20.234:3100;
}

upstream query-frontend {
    server 172.20.20.231:3100;
    server 172.20.20.232:3100;
}

server {
    listen 80;
    listen 3100;

    location = /ring {
        proxy_pass http://cluster$request_uri;
    }

    location = /memberlist {
        proxy_pass http://cluster$request_uri;
    }

    location = /config {
        proxy_pass http://cluster$request_uri;
    }

    location = /metrics {
        proxy_pass http://cluster$request_uri;
    }

    location = /ready {
        proxy_pass http://cluster$request_uri;
    }

    location = /loki/api/v1/push {
        proxy_pass http://write$request_uri;
    }

    location = /loki/api/v1/tail {
        proxy_pass http://read$request_uri;
        # the WebSocket upgrade used by live tailing needs HTTP/1.1 to the upstream
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";
    }

    location ~ /loki/api/.* {
        proxy_pass http://query-frontend$request_uri;
    }
}
```
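To make the routing rules easier to reason about, here is a small Python model of how nginx picks a location for this config: exact (`=`) matches are checked first, then regex (`~`) locations in the order they appear, and each upstream's servers are used in turn. It is a simplification: real nginx also considers prefix locations (none are defined here), uses weighted round robin, and skips failed servers.

```python
import re
from itertools import cycle

# Exact-match ("location =") rules from the config above.
EXACT = {
    "/ring": "cluster",
    "/memberlist": "cluster",
    "/config": "cluster",
    "/metrics": "cluster",
    "/ready": "cluster",
    "/loki/api/v1/push": "write",
    "/loki/api/v1/tail": "read",
}
# Regex ("location ~") rules, tried in order; "~" is case-sensitive and unanchored.
REGEX = [(re.compile(r"/loki/api/.*"), "query-frontend")]

UPSTREAMS = {
    "read": ["172.20.20.231:3100", "172.20.20.232:3100"],
    "write": ["172.20.20.233:3100", "172.20.20.234:3100"],
    "cluster": ["172.20.20.231:3100", "172.20.20.232:3100",
                "172.20.20.233:3100", "172.20.20.234:3100"],
    "query-frontend": ["172.20.20.231:3100", "172.20.20.232:3100"],
}
# Plain round robin per upstream (equal weights, failures ignored).
_rotation = {name: cycle(servers) for name, servers in UPSTREAMS.items()}


def route(request_uri):
    """Return the upstream name this config would proxy a request URI to, or None."""
    path = request_uri.split("?", 1)[0]  # locations match the path, not the query string
    if path in EXACT:
        return EXACT[path]
    for pattern, upstream in REGEX:
        if pattern.search(path):
            return upstream
    return None  # no location matches, so nothing is proxied


def pick_server(upstream):
    """Next backend for an upstream in round-robin order."""
    return next(_rotation[upstream])


if __name__ == "__main__":
    for uri in ("/loki/api/v1/push", "/loki/api/v1/query_range?query=x", "/ring"):
        up = route(uri)
        print(uri, "->", up, pick_server(up))
```

The exact match on `/loki/api/v1/push` is what keeps writes away from the catch-all regex; without it, pushes would land on the read nodes.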

3. Promtail configuration

```yaml
server:
  http_listen_port: 9080
  grpc_listen_port: 0

positions:
  filename: /tmp/positions.yaml

# the nginx (reverse proxy) address
clients:
  - url: http://172.20.20.161:3100/loki/api/v1/push

scrape_configs:
  - job_name: nginx
    pipeline_stages:
      - replace:
          expression: '(?:[0-9]{1,3}\.){3}([0-9]{1,3})'
          replace: '***'
    static_configs:
      - targets:
          - localhost
        labels:
          job: nginx_access_log
          host: 20.235
          agent: promtail
          __path__: /data/openresty/nginx/logs/access.log
```
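The replace stage's regex matches an IPv4-looking token and captures only its last octet, so only that octet becomes `***`. The sketch below reproduces the effect with Python's `re`, assuming (as the promtail replace-stage docs describe) that promtail substitutes just the captured group. It is an emulation for checking the pattern, not promtail itself; the sample log line is made up.

```python
import re

# Same pattern as the promtail replace stage above (RE2 and Python agree on it).
IP_PATTERN = re.compile(r"(?:[0-9]{1,3}\.){3}([0-9]{1,3})")


def mask_last_octet(line, replacement="***"):
    """Replace only capture group 1 (the last octet) of every IPv4-like match."""

    def _sub(match):
        offset = match.start()
        start, end = match.span(1)
        whole = match.group(0)
        return whole[: start - offset] + replacement + whole[end - offset :]

    return IP_PATTERN.sub(_sub, line)


if __name__ == "__main__":
    sample = '172.20.20.235 - - [01/Jan/2023:00:00:00 +0800] "GET / HTTP/1.1" 200 612'
    print(mask_last_octet(sample))
```

Note that the pattern does not validate octets, so any dotted run of four 1-3 digit numbers (for example some version strings) is masked too.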

4. MinIO configuration

```bash
#!/bin/bash

export MINIO_ACCESS_KEY=Admin
export MINIO_SECRET_KEY=1234#abcd
#export MINIO_PROMETHEUS_AUTH_TYPE=public  # allow Prometheus monitoring

/data/minio/minio server --config-dir /data/minio/config --console-address ":9001" \
    http://172.20.20.165/data/minio/images \
    http://172.20.20.165/data/minio/images2 \
    http://172.20.20.166/data/minio/images \
    http://172.20.20.166/data/minio/images2
```
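The script starts a 4-drive distributed MinIO deployment (2 hosts with 2 directories each), which MinIO runs as one erasure set. Assuming a parity of EC:2 for this set size (verify against your MinIO version's storage-class settings), the sketch below estimates usable capacity and quorum with a simplified model: reads need the data-drive count, and writes need one more drive when data equals parity.

```python
def erasure_summary(total_drives, parity):
    """Capacity and quorum figures for one MinIO erasure set (simplified model)."""
    if not 1 <= parity <= total_drives // 2:
        raise ValueError("parity must be between 1 and half the drive count")
    data = total_drives - parity
    # Write quorum is the data-drive count, plus one when data == parity (tie-breaker).
    write_quorum = data + 1 if data == parity else data
    read_quorum = data
    return {
        "data_drives": data,
        "usable_fraction": data / total_drives,
        "read_quorum": read_quorum,
        "write_quorum": write_quorum,
        "tolerated_failures_read": total_drives - read_quorum,
        "tolerated_failures_write": total_drives - write_quorum,
    }


if __name__ == "__main__":
    # 2 hosts x 2 directories = 4 drives; EC:2 is an assumption for this set size.
    print(erasure_summary(total_drives=4, parity=2))
```

In this model, losing one whole host (two drives) still allows reads but blocks writes, so Loki's flushes to the `loki` bucket would fail until the host returns.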

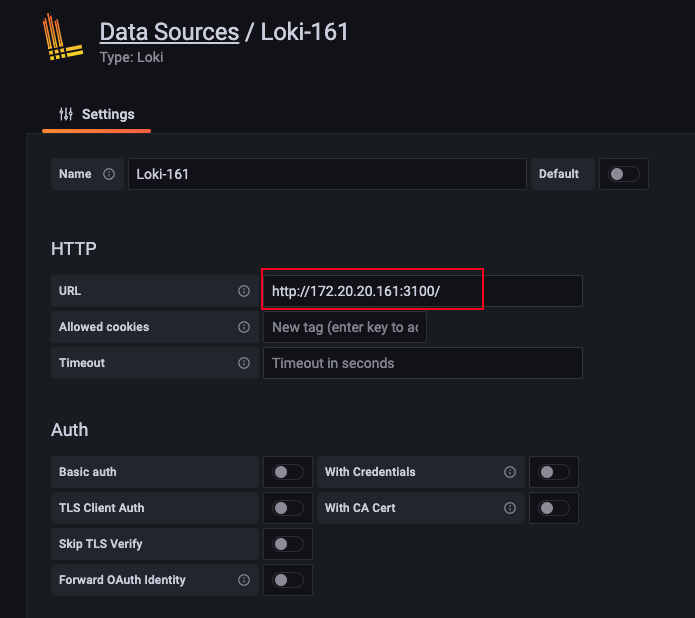

5. Grafana configuration

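I used an existing Grafana instance. When adding the Loki data source, enter the nginx address (for example `http://172.20.20.161:3100/`), and make sure the URL ends with a trailing `/`.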
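Before adding the data source, it can help to query the same nginx entry point by hand. The sketch below only builds a `query_range` URL for Loki's HTTP API through the proxy; the address and the `{job="nginx_access_log"}` selector come from the configs above, while the one-hour window and the limit are arbitrary examples.

```python
import time
from urllib.parse import urlencode, urljoin

# Assumed proxy address (the nginx host from the environment table), with trailing slash.
LOKI_BASE = "http://172.20.20.161:3100/"


def query_range_url(base, logql, start_ns, end_ns, limit=100):
    """Build a Loki /loki/api/v1/query_range URL; start/end are Unix epoch nanoseconds."""
    params = urlencode({"query": logql, "start": start_ns, "end": end_ns, "limit": limit})
    return urljoin(base, "loki/api/v1/query_range") + "?" + params


if __name__ == "__main__":
    end = time.time_ns()
    start = end - 3600 * 10**9  # last hour
    print(query_range_url(LOKI_BASE, '{job="nginx_access_log"}', start, end))
```

Fetching the printed URL with `urllib.request.urlopen` or `curl` from a machine that can reach the proxy should return JSON with `"status": "success"` if the nginx routing to the read nodes works.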
